Overview

This ongoing humanoid robotics research framework investigates how curiosity, purpose-biased intrinsic motivation, and human feedback (“bonding” signals) can enable a humanoid robot to adapt, refine, and align its behavior within dynamic industrial environments.

Rather than following a scripted control pipeline, the humanoid explores and learns from novelty, human interaction cues, and an internal behavioral trait vector that shapes how it perceives, explores, and collaborates.

This framework forms the foundation of my Master’s thesis:

“Curiosity and Bonding Driven Reinforcement Learning for Adaptive Humanoid Collaboration in Industrial Environments.”

Core Idea

Industrial humanoids must handle:

- Constantly shifting workspaces

- Unpredictable human movements

- Ambiguous human cues

- Exploration without risking safety

- Trust building through behavior

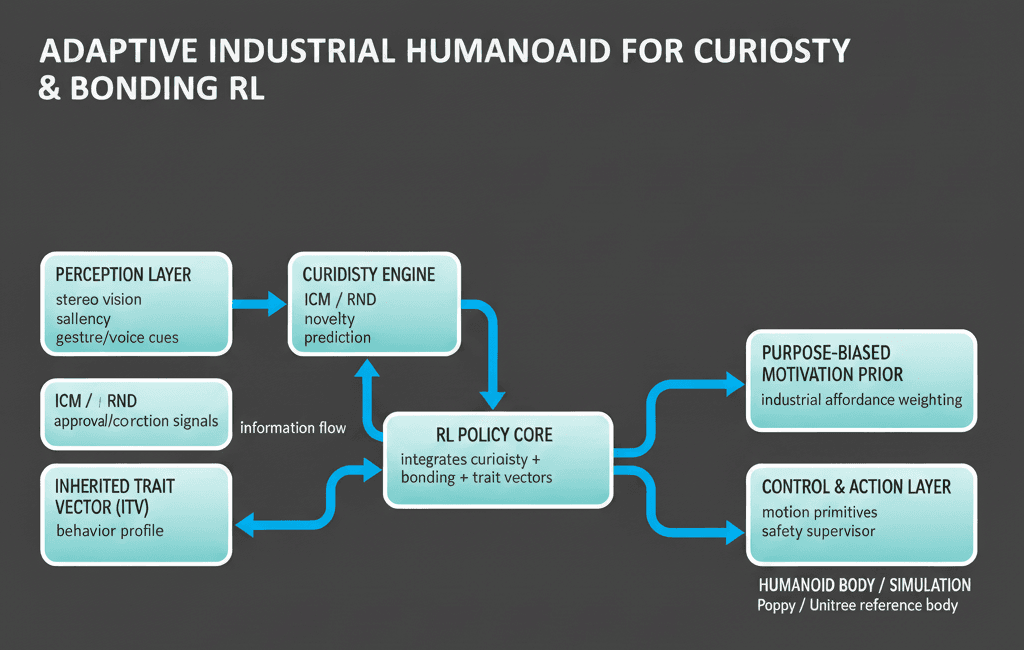

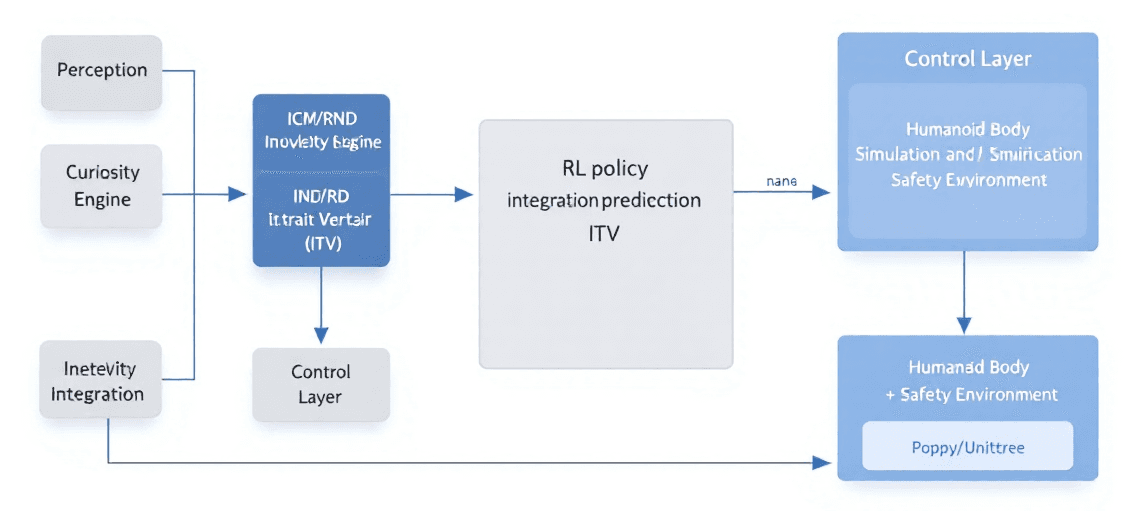

This research integrates a four-part adaptive RL system:

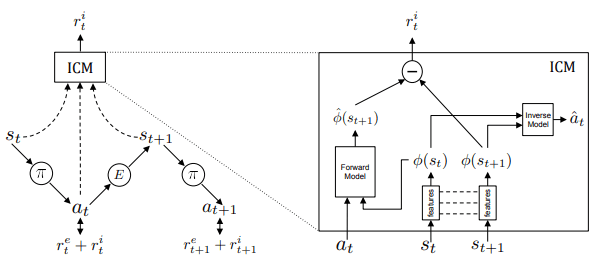

1. Intrinsic Curiosity (ICM/RND)

Internal rewards for surprising or informative transitions, enabling exploration beyond hard-coded behaviors.

2. Purpose-Biased Motivation Prior

A task-domain bias that steers curiosity toward industrial affordances (tools, equipment, workspace geometry) rather than random actions.

3. Bonding Engine (Human Feedback)

Human gestures, voice cues, confirmations, and corrections are converted into social reward-shaping signals that promote intuitive collaboration.

4. Inherited Trait Vector (ITV)

A compact behavioral profile that influences risk tolerance, approach distance, proactivity, and exploration style.

Together, these allow the humanoid to:

- Explore more intelligently

- Adapt RL policies through human cues

- Maintain safe behavior

- Refine industrial task skills over time

- Synchronize with human collaborators
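
One way to picture how the four parts could combine is a single shaped reward. The form below is only an illustrative sketch: every symbol in it is an assumption introduced here, not a definition taken from the thesis.

$$
r_t = r^{\text{task}}_t + \beta(\tau)\, w(s_t)\, r^{\text{cur}}_t + \lambda(\tau)\, r^{\text{bond}}_t
$$

Here $r^{\text{task}}_t$ is the extrinsic task reward, $r^{\text{cur}}_t$ is the ICM/RND curiosity signal, $w(s_t) \in [0, 1]$ is the purpose-bias weight for state $s_t$, $r^{\text{bond}}_t$ is the social reward from human feedback, and $\tau$ is the trait vector, which sets the scales $\beta$ and $\lambda$.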

System Architecture

Hardware & Simulation Bodies

This framework spans three humanoid platforms:

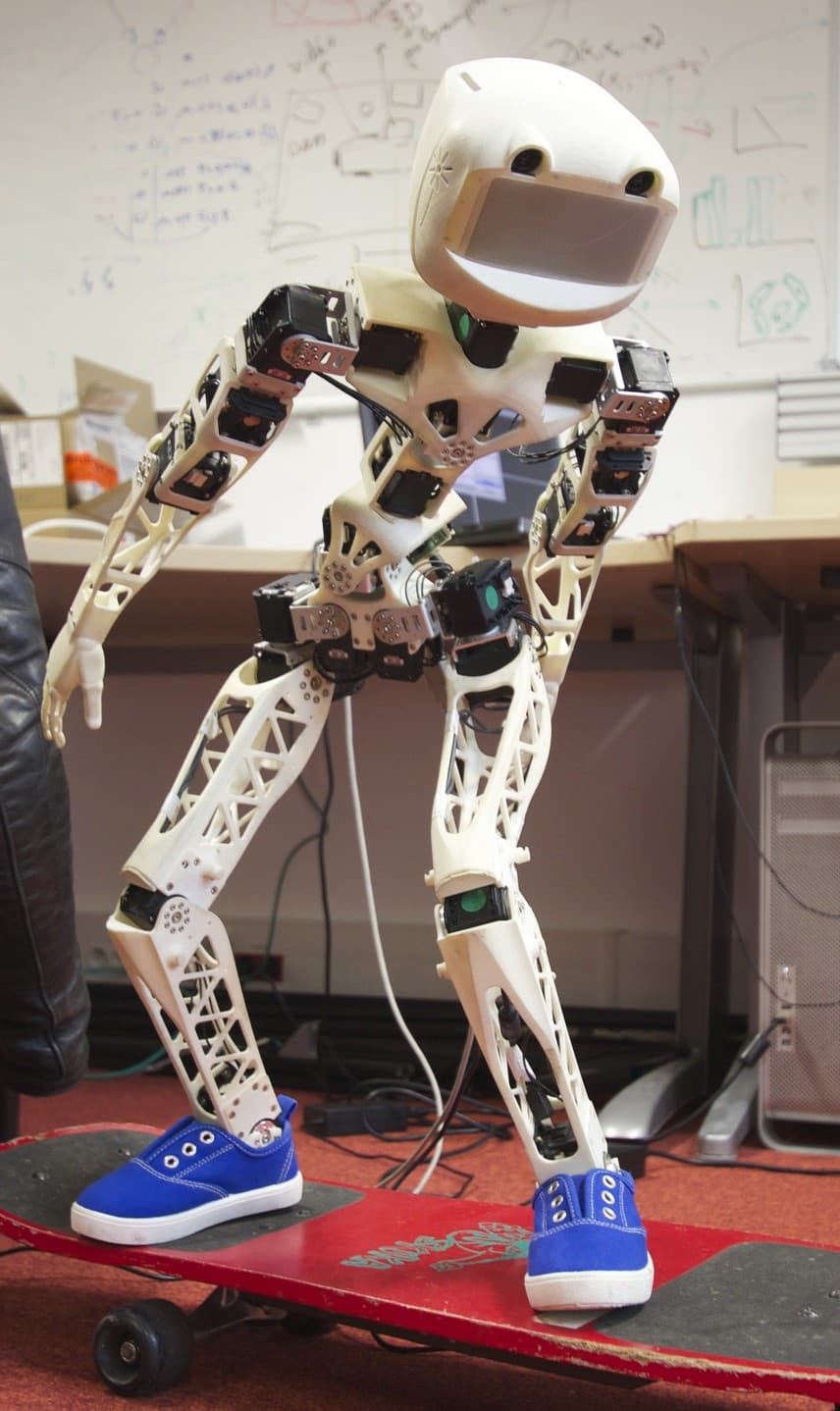

-

Poppy Humanoid (open-source, modular research body)

https://www.poppy-project.org/en/robots/poppy-humanoid -

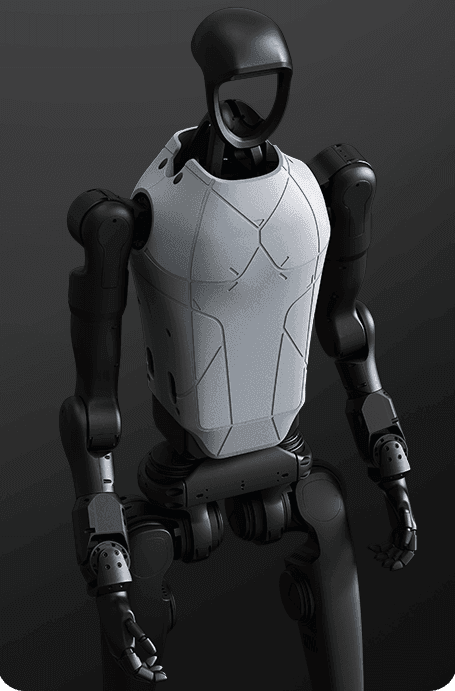

Unitree H1 / H1-Lite (industrial humanoid reference)

https://www.unitree.com/mobile/h1/ -

Custom lightweight humanoid prototype

This enables scalable testing across locomotion, manipulation, perception, and social feedback conditions.

Software Stack

- ROS 2 Humble – distributed control and modular nodes

- PyTorch – RL agents, curiosity models, and ITV learning

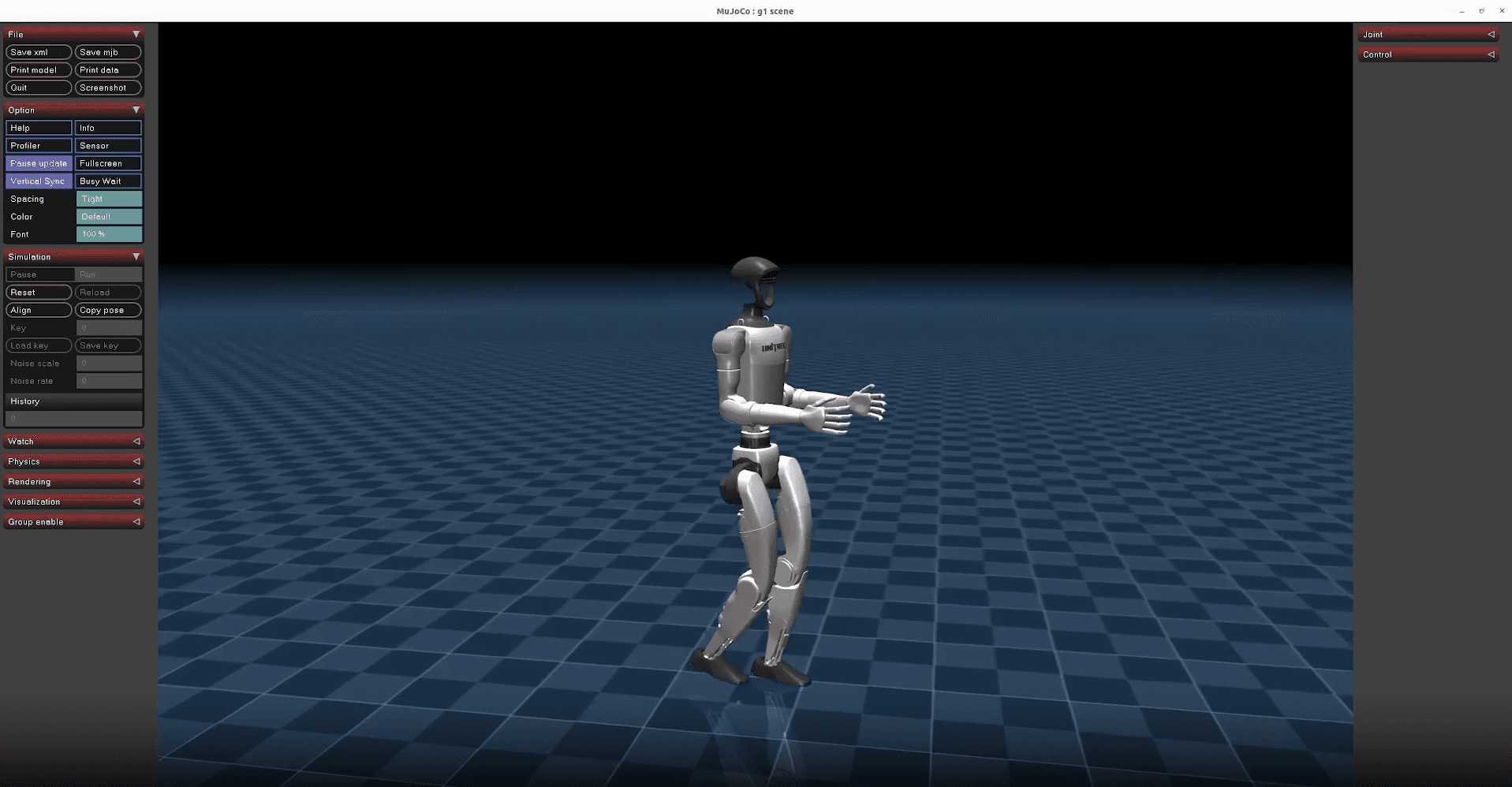

- Gazebo / MuJoCo / Isaac Sim – full humanoid simulation

- OpenCV – saliency, stereo depth, gesture cues

- Audio models – speech & cue detection for bonding signals

- C++/Python ROS 2 nodes – perception, reward shaping, control graphs

Subsystems Developed

Curiosity Engine

ICM/RND with industrial-purpose weighting to avoid meaningless exploration.
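
The idea of purpose-weighted RND can be sketched at toy scale as follows. This is a minimal, dependency-free illustration and not the project's implementation: `TinyRND`, `purpose_weighted_curiosity`, and the `relevance` score are hypothetical names, the networks are plain linear maps, and a real version would presumably use PyTorch modules.

```python
import random


class TinyRND:
    """Toy Random Network Distillation: a predictor imitates a fixed random target."""

    def __init__(self, obs_dim, feat_dim=4, lr=0.05, seed=0):
        rng = random.Random(seed)
        # Fixed, randomly initialised target weights; these are never trained.
        self.target = [[rng.gauss(0, 1) for _ in range(obs_dim)]
                       for _ in range(feat_dim)]
        # Predictor weights, trained to reproduce the target's output.
        self.pred = [[0.0] * obs_dim for _ in range(feat_dim)]
        self.lr = lr

    @staticmethod
    def _apply(weights, obs):
        return [sum(w * x for w, x in zip(row, obs)) for row in weights]

    def novelty(self, obs):
        """Mean squared prediction error; stays high for unfamiliar observations."""
        t = self._apply(self.target, obs)
        p = self._apply(self.pred, obs)
        return sum((a - b) ** 2 for a, b in zip(t, p)) / len(t)

    def update(self, obs):
        """One gradient step on the predictor for this observation."""
        t = self._apply(self.target, obs)
        p = self._apply(self.pred, obs)
        for i, row in enumerate(self.pred):
            err = p[i] - t[i]
            for j, x in enumerate(obs):
                row[j] -= self.lr * err * x


def purpose_weighted_curiosity(rnd, obs, relevance):
    """Scale novelty by an assumed task-relevance score clamped to [0, 1]."""
    relevance = min(max(relevance, 0.0), 1.0)
    return relevance * rnd.novelty(obs)
```

With a relevance of zero the curiosity reward vanishes, so novelty that is unrelated to the task (for example, flickering lights) earns nothing, while novelty near tools or equipment keeps its full weight.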

Bonding Engine

Gesture/voice processing → reward pulses for human-aligned decision shaping.
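
A reward pulse of this kind could look roughly like the sketch below. The cue names, pulse magnitudes, and decay factor are all hypothetical placeholders chosen for illustration; the actual cue vocabulary and shaping scheme are not specified in this document.

```python
from dataclasses import dataclass

# Hypothetical cue vocabulary and pulse magnitudes (assumptions, not project values).
CUE_PULSES = {
    "thumbs_up": 1.0,
    "verbal_confirm": 0.5,
    "verbal_correction": -0.5,
    "stop_gesture": -1.0,
}


@dataclass
class BondingShaper:
    decay: float = 0.8  # fraction of the pulse that survives each control step
    level: float = 0.0  # current social reward signal

    def observe(self, cue: str, confidence: float = 1.0) -> None:
        """Add a pulse for a detected cue, scaled by detector confidence."""
        confidence = min(max(confidence, 0.0), 1.0)
        # Unknown cues contribute nothing rather than raising an error.
        self.level += CUE_PULSES.get(cue, 0.0) * confidence

    def step(self) -> float:
        """Return the social reward for this step, then let the pulse fade."""
        reward = self.level
        self.level *= self.decay
        return reward
```

Letting the pulse decay over a few steps, instead of paying it out in one step, is one simple way to spread credit over the actions that preceded the human's reaction.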

Inherited Trait Vector (ITV)

A trait layer driving risk posture, exploration, proactivity, and cooperation tendencies.
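
As a rough illustration of how a trait layer might condition a policy, the sketch below appends the traits to the observation and uses one of them to scale the curiosity bonus. The field names follow the traits listed earlier, but the default values, the metre unit, and the helper functions are assumptions made for this example only.

```python
from dataclasses import dataclass, astuple


@dataclass(frozen=True)
class TraitVector:
    risk_tolerance: float = 0.3     # 0 = very cautious, 1 = bold
    approach_distance: float = 0.8  # preferred distance to a human (metres, assumed)
    proactivity: float = 0.5        # tendency to act before being asked
    exploration: float = 0.5        # appetite for novelty

    def as_features(self):
        """Flatten the traits into a list of floats in field order."""
        return list(astuple(self))


def condition_observation(obs, traits):
    """Append the traits to the observation so one policy can serve many profiles."""
    return list(obs) + traits.as_features()


def curiosity_scale(traits, base=0.1):
    """Scale the curiosity bonus from 0.5x to 1.5x of base by the exploration trait."""
    return base * (0.5 + traits.exploration)
```

For example, `condition_observation([0.1, 0.2], TraitVector())` yields a six-element input, and a profile with `exploration=1.0` receives a curiosity bonus three times as large as one with `exploration=0.0`.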

Perception Layer

- Stereo depth

- Attention/saliency

- Workspace monitoring

- Gesture recognition

- Voice/intent detection

Control & Behavior Layer

- Action primitives

- Safety-first control graphs

- Policy-conditioned motion generation

- Interpretable behavior models

Current State of Research

Completed:

- Curiosity–bonding–ITV RL architecture

- Saliency + stereo depth perception stack

- Gesture/voice feedback reward injection

- Early RL testing on Poppy and Unitree models

- Modular CAD for custom humanoid prototype

- ROS 2 ↔ PyTorch policy interface

Next phase: closed-loop RL experiments integrating curiosity, bonding, and ITV for task acquisition.

Why This Research Matters

Industrial humanoids need:

- Autonomy

- Adaptive behavior

- Perception-driven intelligence

- Safe exploration

- Trust-building

This framework aims to show how RL, curiosity, perception, and human feedback can be unified in a single architecture for future industrial humanoid coworkers.

It integrates cognitive robotics, social signal processing, and reinforcement learning into a practical, scalable framework.

References

- Du, Y. et al., 2025. Learning Human-Humanoid Coordination for Collaborative Object Carrying. https://arxiv.org/abs/2510.14293

- Kerzel, M. et al., 2023. NICOL: A Neuro-inspired Collaborative Semi-humanoid Robot. https://arxiv.org/abs/2305.08528

- Puig, X. et al., 2023. Habitat 3.0: A Co-Habitat for Humans, Avatars and Robots. https://arxiv.org/abs/2310.13724

- Pathak, D. et al., 2017. Curiosity-driven Exploration by Self-supervised Prediction. https://arxiv.org/abs/1705.05363