Team

This project was developed collaboratively as part of the ROS2 Case Study at Deggendorf Institute of Technology.

Team Members:

- Ruchit Bhanushali — ROS2 Integration, Motion Planning, MoveIt2, Simulation

- Siddharth Ahuja — Perception

- Sahil Gore — Dataset & YOLOv8 Model

- Kaung Sett Thu — RealSense Calibration & Localization

Overview

This project implements a vision-based automated vegetable sorting system using:

- UR3e Collaborative Robotic Arm

- Robotiq 2F-140 Parallel Gripper

- Intel RealSense D435i RGB-D Camera

- YOLOv8 for object detection

- ROS2 + MoveIt2 for motion planning and control

The system detects carrots, tomatoes, and potatoes, localizes them in 3D, computes a grasp pose for each, and executes autonomous pick-and-place.

The goal is to build a fully automated, data-driven sorting pipeline optimized for accuracy, gentle grasping, and industrial throughput.

Problem Statement


Manual sorting suffers from:

- Inconsistent quality & human error

- Labor dependency and rising costs

- Limited throughput

- Product damage

- Lack of intelligent automation

Our system addresses these limitations by combining robotic manipulation with AI-driven perception.

System Proposal

An AI-powered, UR3e-based vegetable sorting system capable of:

- real-time detection

- stable 3D localization

- adaptive grasping

- repeatable automation
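One common way to achieve the stable 3D localization listed above is to filter each object's position over several frames instead of trusting a single depth reading. The sketch below is an assumption, not the project's confirmed method: it keeps a per-axis median over a short window. The class name `PositionSmoother` and the default window of 5 frames are hypothetical.

```python
import statistics
from collections import deque
from typing import Deque, Optional, Tuple

Vec3 = Tuple[float, float, float]


class PositionSmoother:
    """Per-axis median over the last `window` 3D estimates of one object.

    Hypothetical helper: a median rejects single-frame depth spikes
    better than a plain average does.
    """

    def __init__(self, window: int = 5) -> None:
        self._buf: Deque[Vec3] = deque(maxlen=window)

    def update(self, p: Vec3) -> None:
        """Add the latest (x, y, z) estimate; the oldest one drops out."""
        self._buf.append(p)

    def estimate(self) -> Optional[Vec3]:
        """Return the smoothed position, or None until the window is full."""
        if len(self._buf) < self._buf.maxlen:
            return None
        # zip(*buf) regroups the samples into x values, y values, z values.
        return tuple(statistics.median(axis) for axis in zip(*self._buf))
```

Calling `update()` once per detection frame and only planning a grasp once `estimate()` returns a value keeps a single noisy frame from moving the arm.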

Hardware Setup

UR3e Collaborative Arm

- 6-DOF, force-controlled, reliable for pick-and-place.

Robotiq 2F-140 Gripper

- Adaptive parallel-jaw motion with a 140 mm maximum opening to handle vegetables of varying size.
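The 140 mm stroke bounds which vegetables can be grasped. Here is a minimal sketch, assuming the object width has already been measured from the depth data. It picks a pre-grasp opening with a hypothetical 15 mm clearance and converts it to the gripper's 8-bit position request, where Robotiq's register convention is 0 = fully open and 255 = fully closed. The linear mapping is an approximation: the real finger kinematics are not exactly linear, so a calibrated lookup table would be more accurate.

```python
MAX_STROKE_MM = 140.0  # Robotiq 2F-140 maximum opening
CLEARANCE_MM = 15.0    # hypothetical extra opening beyond the object width


def pregrasp_opening_mm(object_width_mm: float, clearance_mm: float = CLEARANCE_MM) -> float:
    """Return the finger opening to command before closing on an object.

    Raises ValueError when the object is wider than the gripper can open.
    """
    if object_width_mm <= 0:
        raise ValueError("object width must be positive")
    if object_width_mm > MAX_STROKE_MM:
        raise ValueError(f"{object_width_mm} mm exceeds the {MAX_STROKE_MM} mm stroke")
    # Open wider than the object, but never beyond the mechanical limit.
    return min(object_width_mm + clearance_mm, MAX_STROKE_MM)


def opening_to_register(opening_mm: float) -> int:
    """Approximate linear map from opening (mm) to the 0..255 position request.

    0 = fully open, 255 = fully closed (Robotiq register convention).
    """
    fraction_closed = 1.0 - opening_mm / MAX_STROKE_MM
    return round(255 * fraction_closed)
```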

Intel RealSense D435i

- RGB-D sensing + IMU for real-time depth & pose estimation.
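The depth stream is what lets a 2D detection be lifted into 3D. A minimal sketch of the pinhole back-projection is shown below. It assumes the depth image is aligned to the color image, lens distortion is ignored, and raw depth uses a 0.001 m scale (the D435i default). The intrinsic values in the example are hypothetical. In practice, pyrealsense2's `rs2_deproject_pixel_to_point` performs the same step and also applies the camera's distortion model.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Intrinsics:
    """Pinhole intrinsics of the aligned color/depth stream, in pixels."""
    fx: float
    fy: float
    ppx: float  # principal point x
    ppy: float  # principal point y


def deproject_pixel(intr: Intrinsics, u: float, v: float, depth_m: float) -> Tuple[float, float, float]:
    """Lift pixel (u, v) with metric depth into a 3D point in the camera optical frame.

    Undistorted model; optical-frame axes: x right, y down, z forward.
    """
    x = (u - intr.ppx) / intr.fx * depth_m
    y = (v - intr.ppy) / intr.fy * depth_m
    return (x, y, depth_m)


DEPTH_SCALE = 0.001  # assumed: raw z16 units -> metres

if __name__ == "__main__":
    intr = Intrinsics(fx=615.0, fy=615.0, ppx=320.0, ppy=240.0)  # hypothetical values
    raw_depth = 450  # raw depth reading at the bounding-box centre
    print(deproject_pixel(intr, 381.5, 240.0, raw_depth * DEPTH_SCALE))
```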

ROS2 Workstation

- Runs YOLOv8 inference, MoveIt2 planning, TF frames, and control nodes.
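The TF frames connect the camera to the robot so that a point seen by the RealSense can be used as a UR3e target. In ROS2 this lookup is normally done through `tf2_ros`. The underlying math is the rigid transform p_base = R p_cam + t. The sketch below applies it by hand, assuming the hand-eye calibration result is available as a translation plus a unit quaternion in ROS (x, y, z, w) order. The calibration values in the test are illustrative only.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def quat_to_matrix(qx: float, qy: float, qz: float, qw: float):
    """Row-major 3x3 rotation matrix from a unit quaternion in (x, y, z, w) order."""
    return (
        (1 - 2 * (qy * qy + qz * qz), 2 * (qx * qy - qz * qw), 2 * (qx * qz + qy * qw)),
        (2 * (qx * qy + qz * qw), 1 - 2 * (qx * qx + qz * qz), 2 * (qy * qz - qx * qw)),
        (2 * (qx * qz - qy * qw), 2 * (qy * qz + qx * qw), 1 - 2 * (qx * qx + qy * qy)),
    )


def transform_point(p_cam: Vec3, translation: Vec3, quat: Sequence[float]) -> Vec3:
    """Express a camera-frame point in the robot base frame: p_base = R @ p_cam + t."""
    r = quat_to_matrix(*quat)
    return tuple(
        sum(r[i][j] * p_cam[j] for j in range(3)) + translation[i] for i in range(3)
    )
```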

Software Stack

- ROS2 Humble — Core middleware

- YOLOv8 — Real-time vegetable detection

- MoveIt2 — Grasp generation + trajectory planning

- RViz2 — Visualization & simulation

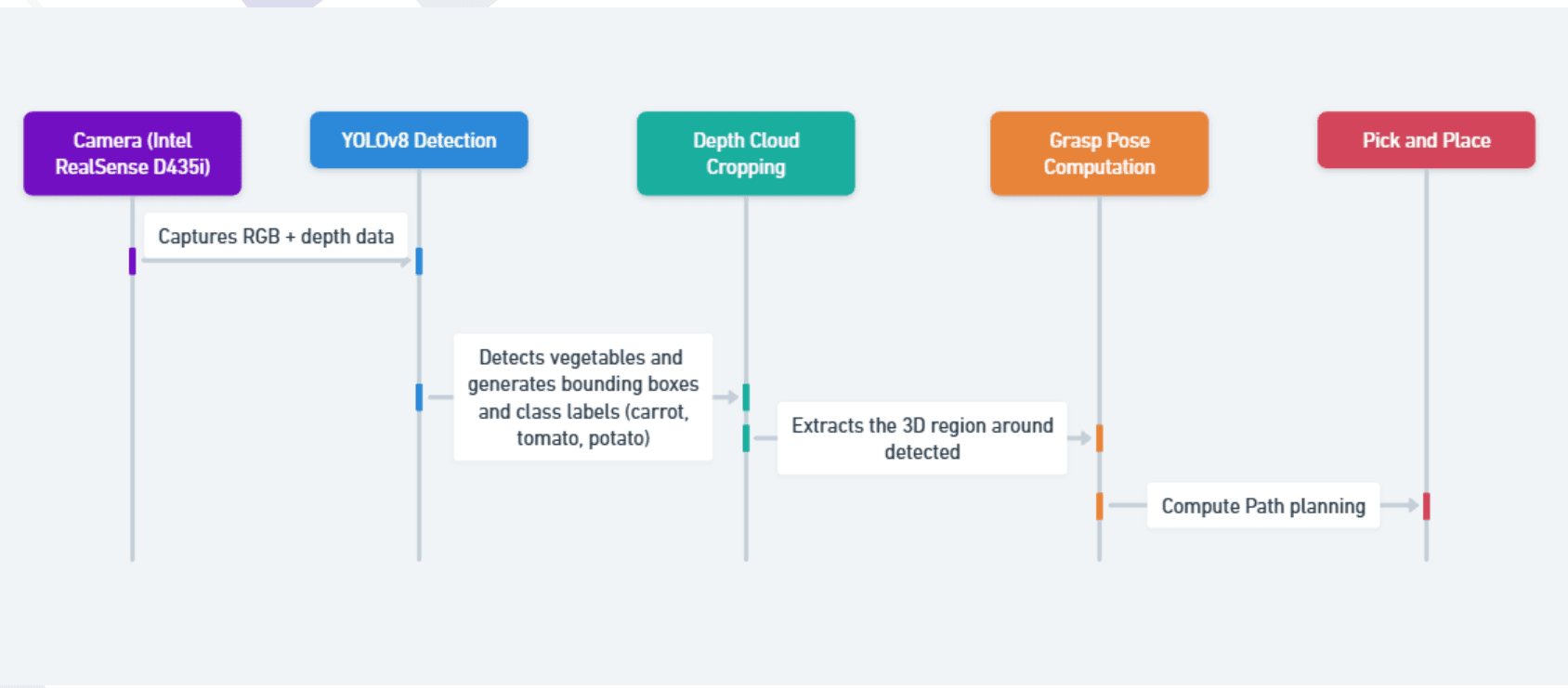
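MoveIt2 plans the motion, but it first needs a target grasp pose. One simple heuristic for a top-down grasp, swapped in here for illustration rather than taken from the project, is principal component analysis on the object's points projected onto the table plane. The fingers close across the object's longest axis, so an elongated carrot is pinched across its narrow side. The function name and return layout are hypothetical.

```python
import math
from typing import Iterable, Tuple

Point2 = Tuple[float, float]


def top_down_grasp(points: Iterable[Point2]) -> Tuple[Point2, float, float]:
    """Centroid, finger-closing yaw (rad) and width from table-plane points.

    The yaw is perpendicular to the object's principal (longest) axis;
    the width is the spread of the points along that closing direction.
    """
    pts = list(points)
    if len(pts) < 3:
        raise ValueError("need at least three points")
    n = len(pts)
    cx = sum(p[0] for p in pts) / n
    cy = sum(p[1] for p in pts) / n
    # 2x2 covariance of the centred points.
    sxx = sum((p[0] - cx) ** 2 for p in pts) / n
    syy = sum((p[1] - cy) ** 2 for p in pts) / n
    sxy = sum((p[0] - cx) * (p[1] - cy) for p in pts) / n
    major = 0.5 * math.atan2(2.0 * sxy, sxx - syy)  # principal-axis angle
    closing = major + math.pi / 2.0                   # close across the minor axis
    ux, uy = math.cos(closing), math.sin(closing)
    proj = [(p[0] - cx) * ux + (p[1] - cy) * uy for p in pts]
    return (cx, cy), closing, max(proj) - min(proj)
```

For near-round tomatoes and potatoes the principal axis is poorly defined, so the yaw matters little there, while the returned width is still useful for choosing the gripper opening.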

Data Flow Pipeline

- RGB-D Capture (RealSense D435i)

- YOLOv8 Detection

- Depth Cloud Cropping

- Grasp Pose Computation

- Pick-and-Place Planning

- UR3e Execution

This creates a fully automated loop from perception to actuation.
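The Depth Cloud Cropping step restricts depth data to the detected object before localization. Below is a minimal sketch. It assumes the depth image is aligned to the color image YOLOv8 ran on and is stored in raw units with a 0.001 m scale. The shrink factor and depth limits are hypothetical tuning values. For simplicity it works on the depth image directly rather than on a point cloud, and the resulting depth can then be back-projected with the camera intrinsics.

```python
import statistics
from typing import List, Optional, Sequence, Tuple

DEPTH_SCALE = 0.001  # assumed: raw depth units -> metres


def object_depth_m(depth: Sequence[Sequence[int]], box: Tuple[int, int, int, int],
                   shrink: float = 0.2, min_m: float = 0.1, max_m: float = 1.5) -> Optional[float]:
    """Median metric depth of an object inside a detection box.

    box is (x1, y1, x2, y2) in pixels, with x2/y2 exclusive. The box is shrunk
    toward its centre so that table pixels at the edges are mostly excluded;
    zero (invalid) and out-of-range readings are dropped before the median.
    """
    x1, y1, x2, y2 = box
    dx = int((x2 - x1) * shrink / 2)
    dy = int((y2 - y1) * shrink / 2)
    values: List[float] = []
    for row in depth[y1 + dy:y2 - dy]:
        for raw in row[x1 + dx:x2 - dx]:
            d = raw * DEPTH_SCALE
            if raw > 0 and min_m <= d <= max_m:
                values.append(d)
    return statistics.median(values) if values else None
```

Returning `None` when no valid pixels remain lets the caller skip that detection instead of planning toward a bad target.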

Dataset

- Public Roboflow dataset

- 683 images

- Augmented for model robustness

- Classes: carrot, tomato, potato
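Roboflow can export a dataset in the YOLOv8 label format: one text file per image, where each line holds `class_id x_center y_center width height` normalized to [0, 1]. The sketch below converts such lines to pixel boxes. The class index order in `CLASS_NAMES` is an assumption and should be checked against the `data.yaml` that comes with the export.

```python
from typing import List, Tuple

CLASS_NAMES = ["carrot", "tomato", "potato"]  # assumed index order

Box = Tuple[float, float, float, float]


def parse_yolo_labels(text: str, img_w: int, img_h: int) -> List[Tuple[str, Box]]:
    """Convert YOLO-format label lines into (class name, (x1, y1, x2, y2)) in pixels."""
    boxes = []
    for line in text.splitlines():
        parts = line.split()
        if not parts:
            continue  # skip blank lines
        cls_id = int(parts[0])
        cx, cy, w, h = (float(v) for v in parts[1:5])
        # Scale normalized centre/size to pixels, then convert to corners.
        cx, w = cx * img_w, w * img_w
        cy, h = cy * img_h, h * img_h
        boxes.append((CLASS_NAMES[cls_id], (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)))
    return boxes
```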

Current Status

Completed:

- YOLOv8 vegetable detection

- Depth cloud processing

- UR3e simulation in RViz2 & MoveIt2

- Object localization

- Basic pick-and-place execution

Next Steps:

- Real-world execution on the UR3e

- Final calibration & validation

- Full documentation
